It's hard to believe how much can change in a year.

In 2021, there were more than 100 public and venture-backed startups with the same mission: competing with NVIDIA to produce the fast chips needed to create and run artificial intelligence (AI). Fast forward to 2023, and many of those companies are struggling to gain market traction or raise enough capital to stay in business. Part of the problem is undoubtedly the global economy; many AI adopters and investors don't have the resources or courage to give new chips a chance. But the real culprit is NVIDIA, which has turned out to be much harder to beat than many companies and their investors thought.

So why does Toronto-based startup Tenstorrent remain competitive, and is it any different? Why should we believe that Tenstorrent can succeed where so many struggle and even fail? This post explores how Tenstorrent differentiates itself from dozens of other startups in terms of leadership, strategy, and technology.

If an AI startup isn't scared, it should be

Do we really need another AI hardware startup? In the past five years, the industry has been flooded with more than 100 such companies. Some have gone out of business after realizing how hard NVIDIA's data center AI technology is to beat. As a result, investors have become more cautious. Virtually all of these companies are vying to become the second source, behind NVIDIA, for data center AI training and inference processing. Can they win? In our view, they should be thrilled if they can capture a combined 10% of the data center AI pie over the next three years. Yes, NVIDIA is that good.

Entering the storm is Tenstorrent, a Toronto-based AI hardware startup with offices in the Bay Area, Austin and Tokyo, Japan. Over the past year, the company has begun to expand from its early days of research and development into a true mission-driven company, adding executives in marketing, sales, support and functional areas to the engineering talent the company has been hiring. The company has grown to more than 280 employees.

From a leadership perspective, legendary CPU designer (including at Apple, AMD, Tesla, and Intel) and early angel investor Jim Keller recently took on the role of CEO. Meanwhile, founder Ljubisa Bajic has returned to his role as an adviser. Olof Johansson serves as Vice President of Operating Systems and Infrastructure, and Mamoru Nakano serves as Head of Japan Sales. Now, Raja Koduri, formerly of Intel and AMD, has just joined the board.

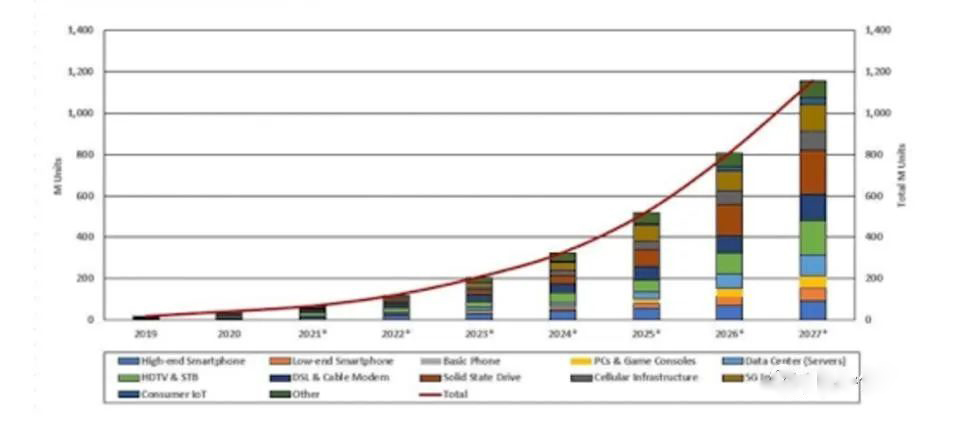

The emerging computing environment: a rich opportunity

Data centers are growing rapidly as the move to cloud-based resources coincides with the move to HPC and AI accelerators. NVIDIA's data center revenue has received another boost recently from the surge in interest in ChatGPT and the new AI battle between Microsoft and Google over the future of search and the applicability of AI in productivity applications. Last quarter, NVIDIA's data center sales reached $3.8 billion, up 31%. Markets and Markets expects the global data center accelerator market to reach $64 billion by 2027, with a CAGR of 24.7% over the forecast period.

At the same time, the CPU market is beginning to diversify significantly, with AMD's share of the data center market growing from 10.7% to 17.6% as of the most recent quarter, although Intel still has the upper hand. Arm, meanwhile, continues to grow, with Gartner predicting it will reach about 19% of server shipments by 2026.

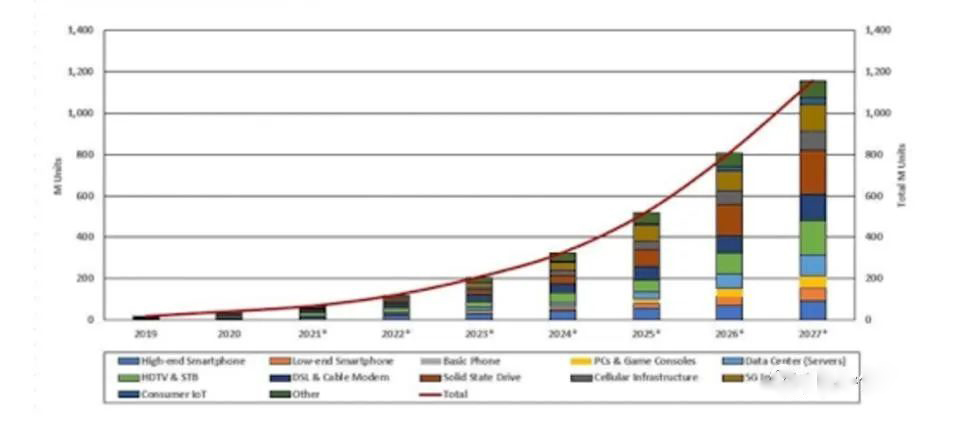

Then there is the open-source RISC-V ISA, which is penetrating the microcontroller market and has ambitions in the data center. Semico Research forecasts that RISC-V will account for 25 billion AI SoCs by 2027, which is a big chip opportunity.

As AMD and Intel show, AI accelerators will increasingly be integrated on chip with CPU cores that perform scalar and housekeeping operations. Tenstorrent believes it should develop its own RISC-V core instead of relying on third parties. If Tenstorrent can provide a superior RISC-V core, it could create a second revenue stream. So when a $64 billion AI accelerator market is combined with 25 billion RISC-V SoCs, the opportunity for Tenstorrent becomes even clearer.

The Tenstorrent strategy

Jim Keller has worked closely with infrastructure buyers for many years; he knows what customers want. In short, they want an open AI computing platform that is easy to deploy at scale and offers lower TCO than current alternatives. Tenstorrent today has an AI chip, a roadmap that promises those benefits, and Mr. Keller's credibility and track record of delivering on such plans.

Tenstorrent differs from the rest of the field in ways that give it a higher probability of success. First, the company has a software strategy that encourages innovation in the open-source community. Second, Tenstorrent is the only startup with both AI accelerator and RISC-V CPU designs and ambitions. Finally, Tenstorrent has attracted a world-class engineering team, and the company is now led by perhaps the industry's best-known CPU designer, Jim Keller.

Conclusion

While we wish we had a better understanding of the final product portfolio and the revenue Tenstorrent could achieve, we believe its RISC-V and AI accelerator technologies offer performance and TCO advantages over many of the company's competitors. AMD, Intel, and NVIDIA have all embraced the idea of combining GPUs and CPUs; all three point to the memory and throughput advantages that such combinations can provide. These advantages matter a great deal for meeting the training and inference requirements of large language models. Tenstorrent is the only startup we know of that can achieve this level of technology integration.

Making the best RISC-V cores available to big buyers as IP or chiplets can create another revenue stream or an attractive exit strategy. So when someone asks us who looks good enough to compete in this new world, we always point to Tenstorrent as the likely winner.

What chip is Jim Keller making?

Tenstorrent, a startup with industry icon Jim Keller at the helm, has assembled a stellar team of AI and CPU engineers with ambitious plans involving general-purpose processors and AI accelerators.

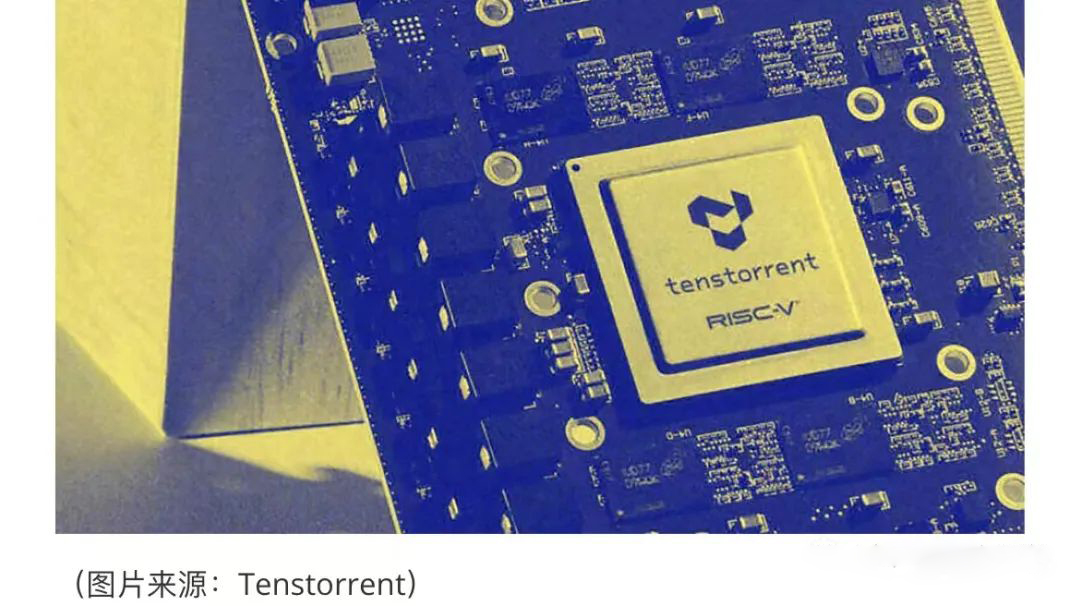

Currently, the company is working on the industry's first RISC-V core with an eight-wide decoder, capable of handling both client and HPC workloads, which will first be used in a data-center-oriented 128-core high-performance CPU. The company also has a roadmap covering multiple generations of processors, which we'll cover below.

Why RISC-V?

We recently spoke with Wei-Han Lien, Tenstorrent's lead CPU architect, about the company's vision and roadmap. Lien has an impressive background, having worked at NexGen, AMD, PA Semi, and Apple; he is perhaps best known for his work on the CPU microarchitecture and implementation of Apple's A6, A7 (the world's first 64-bit Arm SoC), and M1.

The company has many world-class engineers with extensive experience in x86 and Arm design, so one might ask why Tenstorrent decided to develop RISC-V CPUs, given that the data center software stack for this instruction set architecture (ISA) is less comprehensive than those for x86 and Arm. The answer Tenstorrent gave us is simple: x86 is controlled by AMD and Intel, while Arm is controlled by Arm Holdings, which limits the pace of innovation.

"There are only two major companies in the world that can produce x86 CPUs," Wei-Han Lien said. "Because of x86 licensing restrictions, innovation is largely controlled by one or two companies. When companies get really big, they become bureaucratized and the pace of innovation [slows]. [...]. Arm is a bit like that. They claim they are like a RISC-V company, but if you look at their specifications, [it] gets so complicated. It is also actually somewhat led by an architect. [...]. Arm kind of dictates all possible scenarios, even architecture [licensing] partners."

RISC-V, by contrast, is developing rapidly. According to Tenstorrent, because it is an open-source ISA, it is easier and faster to innovate with it, especially when it comes to emerging and rapidly developing AI solutions.

"I was looking for a processor solution to go with [Tenstorrent's] AI solution, and then we wanted the BF16 data type, and then we went to Arm and said, 'Hey, can you support us?' They said 'no,' and it could take two years of internal discussions and discussions with partners and so on," Lien explained. "But we talked to SiFive; they just put it there. So, there are no limits, they built it for us, it's free."

On the one hand, Arm Holdings' approach ensures high quality standards and a comprehensive software stack. On the other hand, it means a slower pace of ISA innovation, which can be a problem for emerging applications such as AI processors that need to get off the ground quickly.

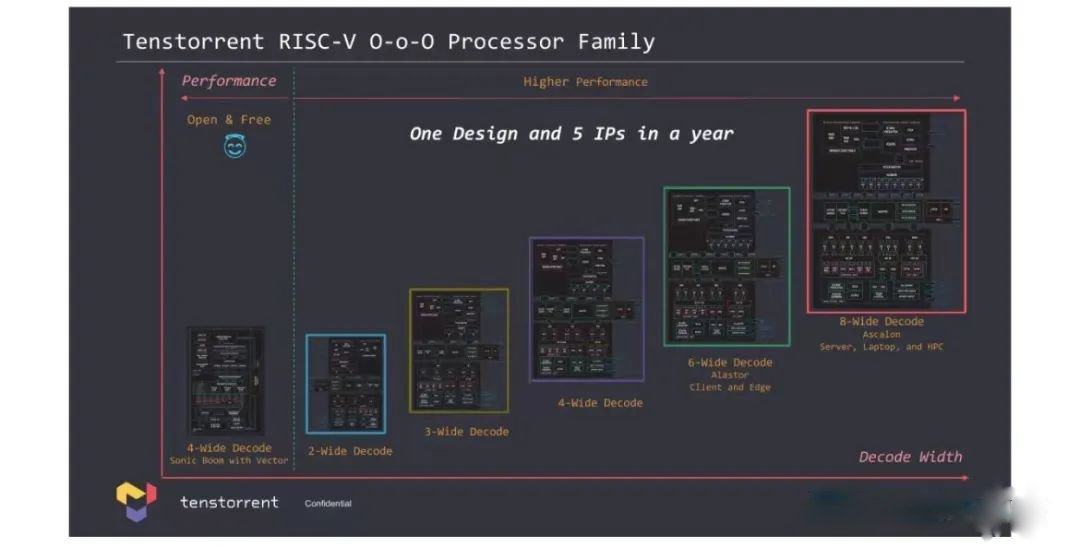

One microarchitecture, five CPU IP per year

Because Tenstorrent aims to address the full range of AI applications, it needs not only different systems-on-chip or systems-in-package, but also various CPU microarchitecture implementations and system-level architectures to hit different power and performance targets. That is the problem Wei-Han Lien's department is working on.

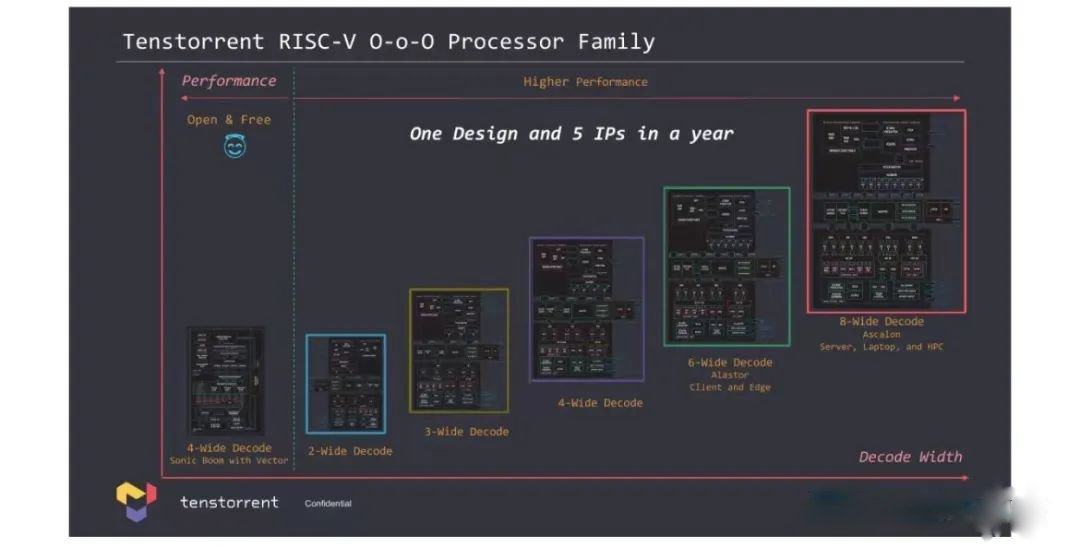

Humble consumer electronics SoCs and powerful server processors have little in common, but can share the same ISA and microarchitecture (implemented differently). That's where Lien's team comes in. Tenstorrent says the company's CPU team developed an out-of-order RISC-V microarchitecture and implemented it in five different ways to solve problems for a variety of applications.

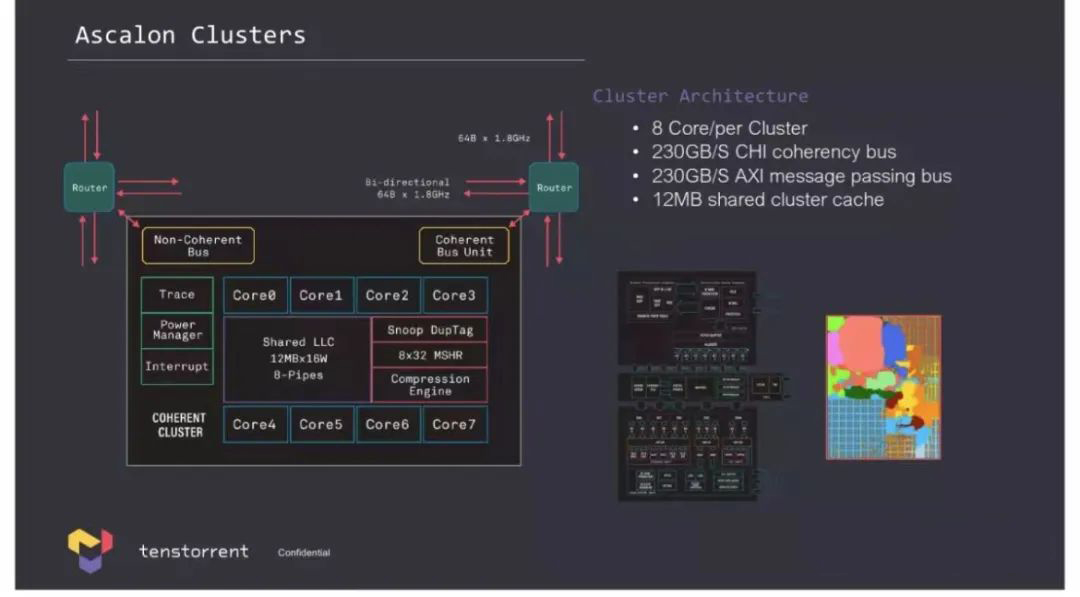

Tenstorrent now has five different RISC-V CPU core IPs, with two-, three-, four-, six-, and eight-wide decoders, for use in its own processors or for licensing to interested parties. For potential customers who need a very basic CPU, the company can offer a small core with two-wide execution, but for customers who need higher performance for edge, client PC, and high-performance computing, it has the six-wide Alastor and eight-wide Ascalon cores.

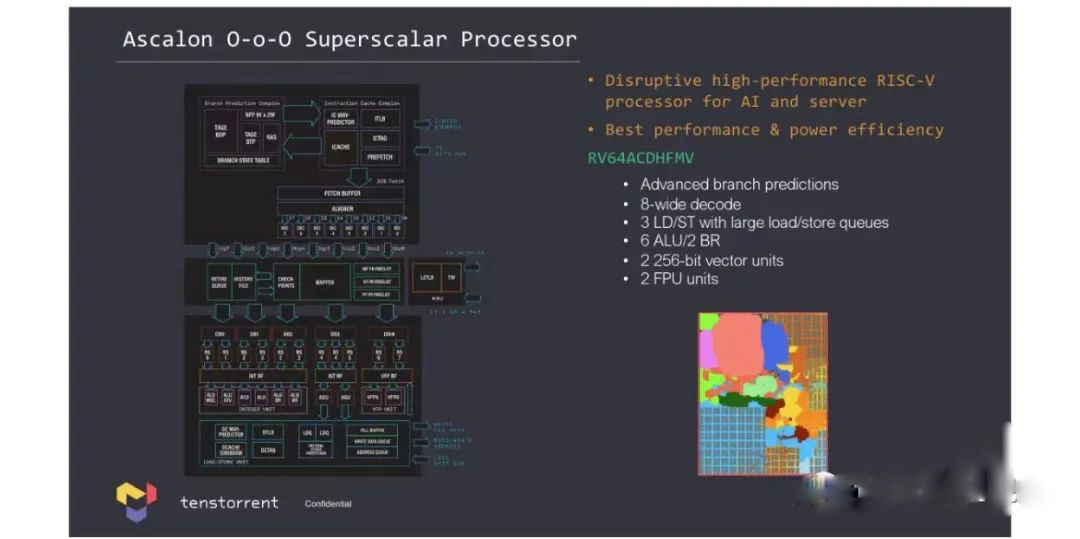

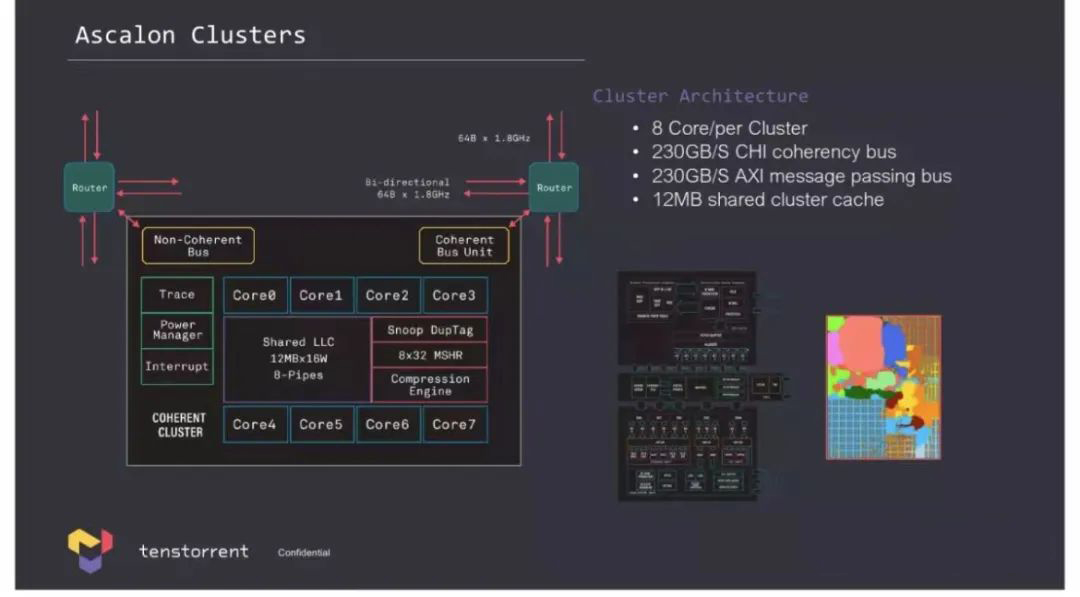

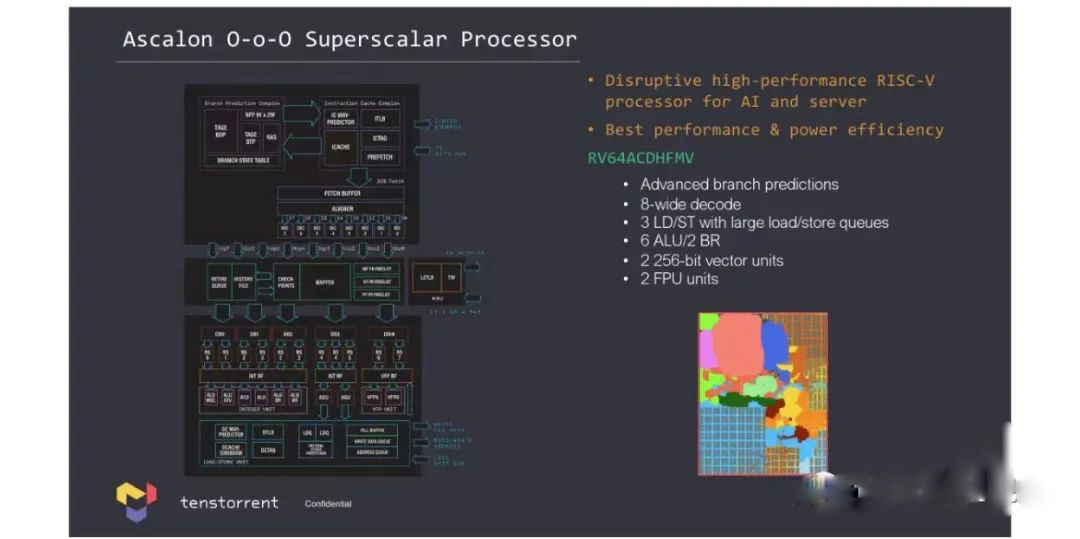

Each out-of-order Ascalon (RV64ACDHFMV) core with eight-wide decode has six ALUs, two FPUs, and two 256-bit vector units, making it very powerful. Given that modern x86 designs use a four-wide (Zen 4) or six-wide (Golden Cove) decoder, we are looking at a very powerful core.
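To make the ISA string quoted above easier to read, here is a minimal Python sketch that splits "RV64ACDHFMV" into a register width and a list of extensions. The single-letter meanings follow the standard RISC-V naming convention; the function name `decode_isa_string` and the lookup table are illustrative and not part of any Tenstorrent tool. The string is parsed exactly as quoted, so the base integer letter "I" is only reported if it actually appears.

```python
import re

# Standard single-letter RISC-V extension names.
EXTENSIONS = {
    "I": "base integer instructions",
    "M": "integer multiply/divide",
    "A": "atomic instructions",
    "F": "single-precision floating point",
    "D": "double-precision floating point",
    "C": "compressed (16-bit) instructions",
    "H": "hypervisor",
    "V": "vector",
}


def decode_isa_string(isa: str) -> tuple[int, list[str]]:
    """Split a string such as 'RV64ACDHFMV' into (register width, extension names)."""
    match = re.fullmatch(r"RV(32|64|128)([A-Z]+)", isa.upper())
    if match is None:
        raise ValueError(f"not a simple RISC-V ISA string: {isa!r}")
    width = int(match.group(1))
    # Unknown letters are reported rather than silently dropped.
    names = [EXTENSIONS.get(letter, f"unknown ({letter})") for letter in match.group(2)]
    return width, names


width, names = decode_isa_string("RV64ACDHFMV")
print(f"{width}-bit base with extensions:")
for name in names:
    print(" -", name)
```

Read this way, the string describes a 64-bit core with atomics, compressed instructions, single- and double-precision floating point, hypervisor support, integer multiply/divide, and the vector extension.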

Wei-Han Lien is one of the designers responsible for Apple's "wide" CPU microarchitecture, which can execute up to eight instructions per clock. Apple's A14 and M1 SoCs, for example, feature eight-wide high-performance Firestorm CPU cores, and two years after their introduction, they remain among the most energy-efficient designs in the industry. Lien may be one of the best experts in the industry on "wide" CPU microarchitecture, and to our knowledge, he is the only processor designer to lead a team of engineers developing an eight-wide RISC-V high-performance CPU core.

In addition to a variety of RISC-V general-purpose cores, Tenstorrent has a proprietary Tensix core tailored for neural network inference and training. Each Tensix core contains five RISC cores, an array math unit for tensor operations, a SIMD unit for vector operations, 1MB or 2MB of SRAM, and fixed-function hardware for accelerating network packet operations and compression/decompression. The Tensix core supports multiple data formats, including BF4, BF8, INT8, FP16, BF16, and even FP64.
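BF16, one of the formats listed above and the data type Lien mentions asking CPU vendors for, keeps the sign bit and all eight exponent bits of a 32-bit float but only the top seven mantissa bits. The sketch below illustrates that layout in plain Python by truncating a float32 encoding. It is only an illustration of the format: it truncates rather than rounding to nearest, and it says nothing about how Tensix hardware actually performs the conversion. The helper names are hypothetical.

```python
import struct


def float_to_bf16_bits(x: float) -> int:
    """Return the 16-bit BF16 pattern for x by truncating its float32 encoding."""
    (bits32,) = struct.unpack(">I", struct.pack(">f", x))
    # Keep the sign bit, 8 exponent bits, and the top 7 mantissa bits.
    return bits32 >> 16


def bf16_bits_to_float(bits16: int) -> float:
    """Expand a 16-bit BF16 pattern back to a Python float."""
    (value,) = struct.unpack(">f", struct.pack(">I", (bits16 & 0xFFFF) << 16))
    return value


print(hex(float_to_bf16_bits(1.0)))                     # 0x3f80
print(bf16_bits_to_float(float_to_bf16_bits(3.14159)))  # 3.140625
```

The second line shows the trade-off: BF16 covers the same numeric range as float32 but carries only about two to three significant decimal digits.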

Impressive road map

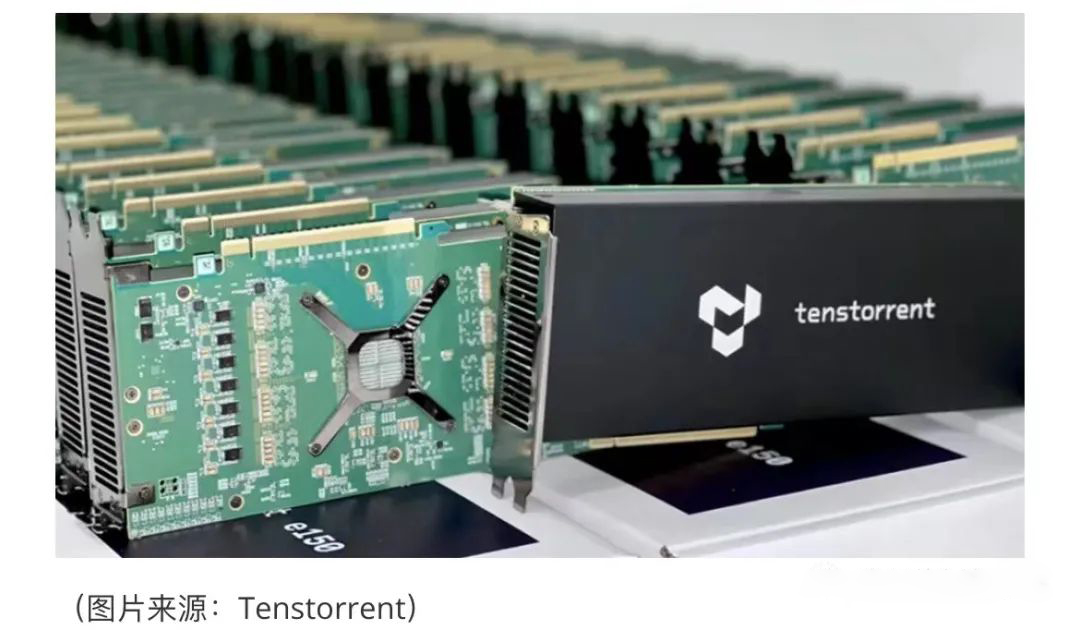

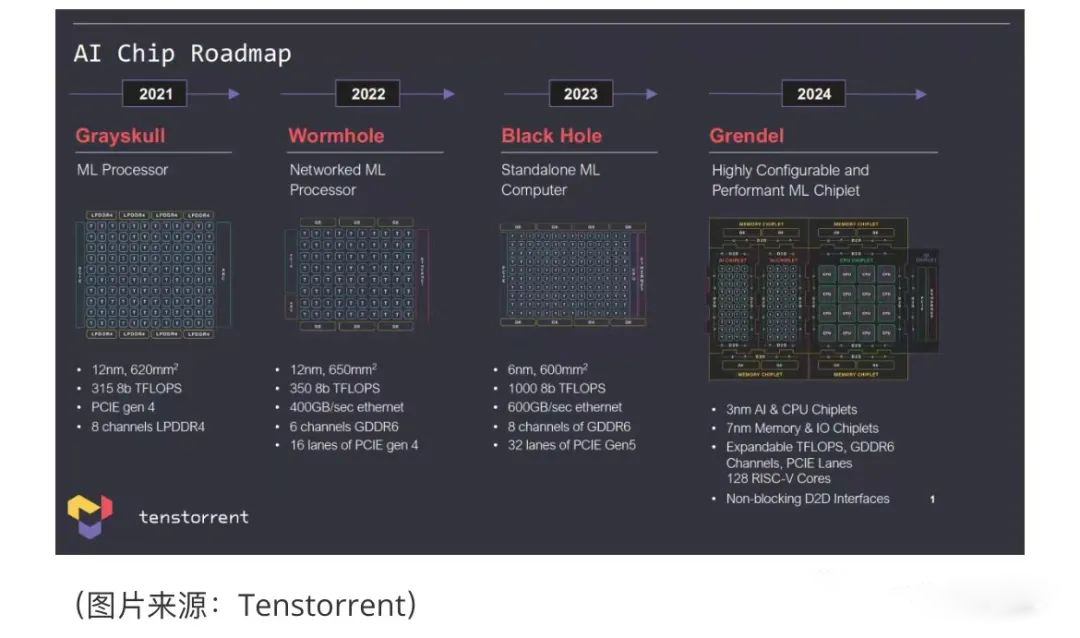

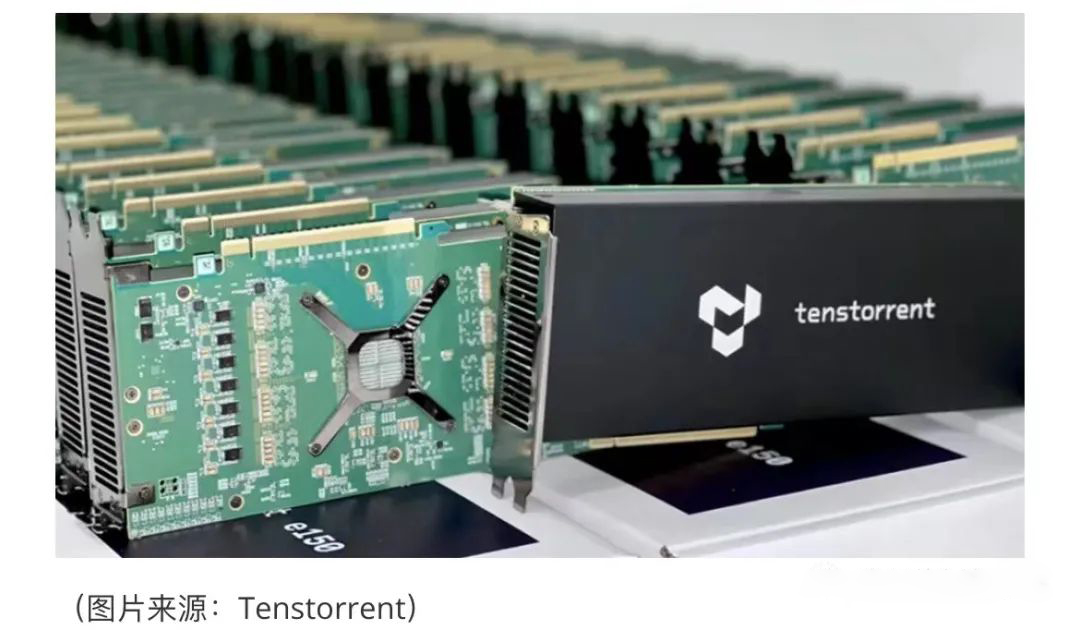

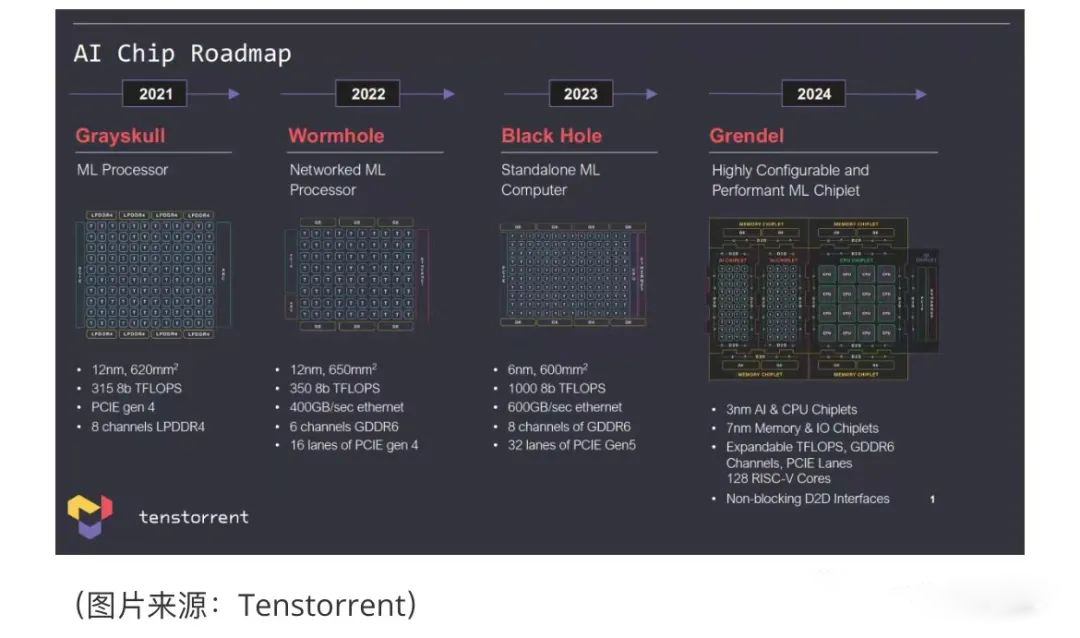

Currently, Tenstorrent has two products: a machine learning processor called Grayskull, which provides about 315 INT8 TOPS and plugs into a PCIe Gen4 slot, and a networked ML processor called Wormhole, which provides about 350 INT8 TOPS and has a GDDR6 memory subsystem, a PCIe Gen4 x16 interface, and 400GbE connections to other machines.

Both devices require a host CPU and are available either as add-in boards or inside pre-built Tenstorrent servers. A 4U Nebula server with 32 Wormhole ML cards provides about 12 INT8 POPS of performance at 6kW.
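As a quick sanity check on these figures, the short Python sketch below sums the per-card throughput and derives an approximate efficiency number. All inputs are the rounded values quoted in the text; the variable names are illustrative, and the TOPS-per-watt result is a derived estimate rather than a published specification.

```python
CARDS_PER_SERVER = 32
TOPS_PER_CARD = 350        # approximate INT8 TOPS per Wormhole card (from the text)
QUOTED_SERVER_POPS = 12    # approximate INT8 POPS per Nebula server (from the text)
SERVER_POWER_KW = 6        # quoted server power

# 1 POPS = 1000 TOPS
summed_pops = CARDS_PER_SERVER * TOPS_PER_CARD / 1000
tops_per_watt = (QUOTED_SERVER_POPS * 1000) / (SERVER_POWER_KW * 1000)

print(f"Sum of card throughput: {summed_pops:.1f} POPS")
print(f"Quoted server throughput: {QUOTED_SERVER_POPS} POPS")
print(f"Implied efficiency: {tops_per_watt:.1f} INT8 TOPS per watt")
```

Thirty-two cards at roughly 350 TOPS add up to about 11.2 POPS, which is consistent with the rounded "about 12 POPS" figure, and 12 POPS at 6kW works out to about 2 INT8 TOPS per watt at the server level.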

Later this year, the company plans to launch its first standalone CPU+ML solution, Blackhole, which combines 24 SiFive X280 RISC-V cores with multiple third-generation Tensix cores for machine learning workloads, interconnected by two 2D torus networks that run in opposite directions. The device will provide 1 INT8 POPS of compute throughput (a roughly three-fold performance boost compared to its predecessor), eight GDDR6 memory channels, 1200 Gb/s of Ethernet connectivity, and PCIe Gen5 lanes.
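The idea of two 2D torus networks running in opposite directions can be illustrated with a small hop-count model. The Python sketch below assumes each network is unidirectional: network 0 only moves toward increasing x and y (wrapping around at the edges), and network 1 only moves toward decreasing x and y. The 8x8 grid size, the function names, and the routing rule are assumptions made for illustration, not disclosed details of the chip.

```python
def hops(src, dst, cols, rows, network):
    """Hop count from src to dst on a unidirectional 2D torus.

    network 0 steps toward +x/+y, network 1 toward -x/-y (assumed behaviour).
    Wraparound links are modelled with modular arithmetic.
    """
    step = 1 if network == 0 else -1
    dx = ((dst[0] - src[0]) * step) % cols
    dy = ((dst[1] - src[1]) * step) % rows
    return dx + dy


def best_network(src, dst, cols, rows):
    """Pick whichever of the two networks reaches dst in fewer hops."""
    counts = [hops(src, dst, cols, rows, n) for n in (0, 1)]
    network = 0 if counts[0] <= counts[1] else 1
    return network, counts[network]


# Hypothetical 8x8 grid of cores.
print(best_network((0, 0), (1, 0), 8, 8))  # (0, 1): one hop going "forward"
print(best_network((0, 0), (7, 7), 8, 8))  # (1, 2): two hops going "backward" via wraparound
```

Under these assumptions, a destination that is far away on one network is close on the other, which is one plausible reason to run the two networks in opposite directions.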

In addition, the company plans to add a 2TB/s die-to-die interface for dual-chip solutions as well as future use. The chip will use a 6nm-class manufacturing process (we expect it to be TSMC N6, but Tenstorrent has yet to confirm this), and at 600mm² it will be smaller than the previous generation, which was produced on TSMC's 12nm-class node. One thing to keep in mind is that Tenstorrent has not yet finished developing Blackhole, and its final feature set may differ from what the company has disclosed today.

Next year, the company will release its ultimate product: a multi-chiplet solution called Grendel, which pairs Tenstorrent's own Ascalon general-purpose cores, built on its in-house RISC-V microarchitecture with an eight-wide decoder, with a Tensix-based chiplet for ML workloads.

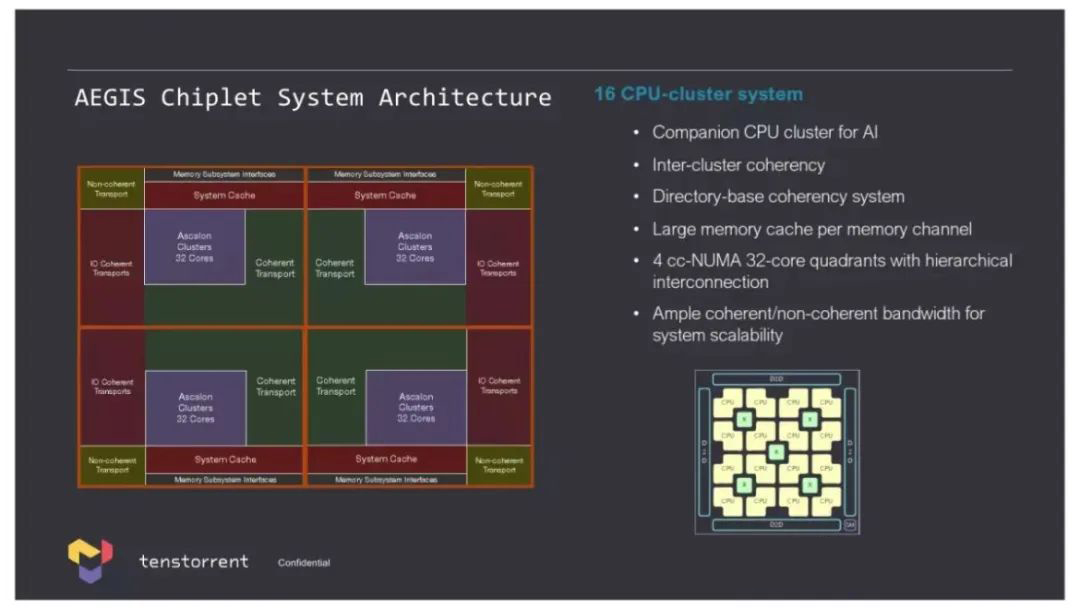

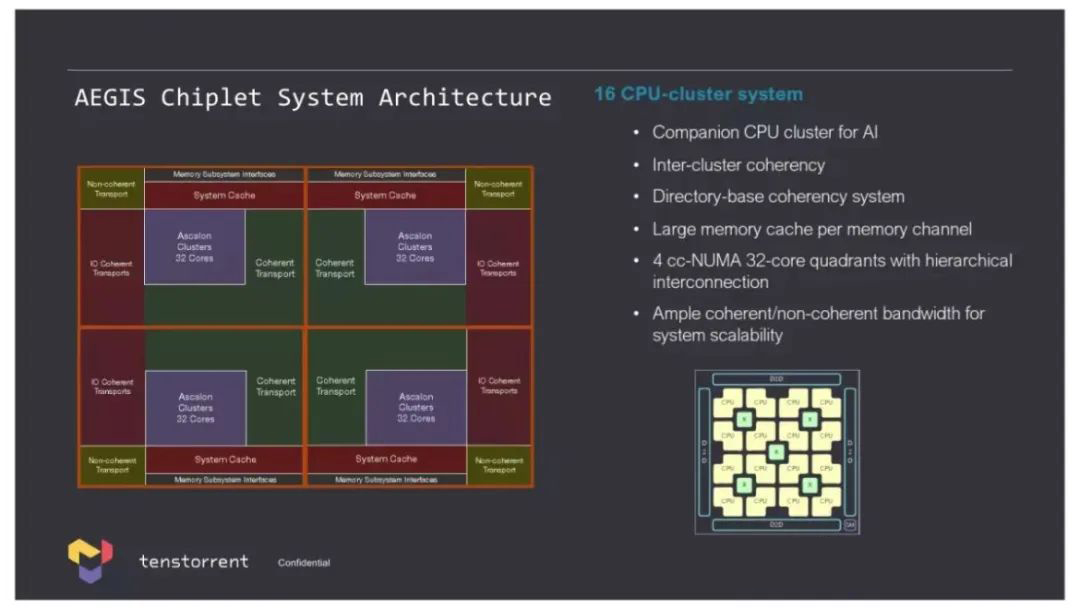

Grendel consists of an Aegis chiplet with high-performance Ascalon general-purpose cores and one or more chiplets with Tensix cores for ML workloads. Depending on business requirements (as well as the company's financial capabilities), Tenstorrent can implement the AI chiplet on a 3nm-class process technology to take advantage of higher transistor density and Tensix core count, or it could continue to use Blackhole chiplets for AI workloads (and even distribute some of the work among their 24 SiFive X280 cores, the company says). The chiplets will communicate with each other using the 2TB/s interconnect described above.

The Aegis chiplet features 128 general-purpose RISC-V eight-wide Ascalon cores, organized in four 32-core clusters with inter-cluster coherency, and will be manufactured using a 3nm-class process technology. In fact, the Aegis CPU chiplet will be among the first to use a 3nm-class manufacturing process, which could put the company at the forefront of high-performance CPU design.

"The changes [at Tensix] are gradual, but they are there," explains Ljubisa Bajic, the company's founder. "[They added] new data formats, a changed ratio of FLOPS to SRAM capacity, SRAM bandwidth, network-on-chip bandwidth, new sparsity features, and general features."

Interestingly, different Tenstorrent slides mention different memory subsystems for the Blackhole and Grendel products. This is because the company is always looking for the most efficient memory technology, and because it licenses DRAM controllers and physical interfaces (PHYs), it has some flexibility in choosing the exact type of memory. In fact, Lien says Tenstorrent is also developing its own memory controllers for future products, but for its 2023-2024 solutions it intends to use third-party MCs and PHYs. Also, Tenstorrent does not plan to use any exotic memory, such as HBM, at this time.

Business model: Sell solutions and license IP

While Tenstorrent has five different CPU IPs (albeit based on the same microarchitecture), it only has AI/ML products in the pipeline (if fully configured servers are not counted), and these use either SiFive's X280 or Tenstorrent's eight-wide Ascalon CPU cores. Therefore, it is reasonable to ask why it needs so many CPU core implementations.

The short answer to this question is that Tenstorrent has a unique business model that includes IP licensing (in the form of RTL, hard macros, and even GDS), selling chiplets, selling add-in ML accelerator cards or ML solutions that combine CPU and ML chiplets, and selling fully configured servers that contain these cards.

Companies building their own SoCs can license the RISC-V cores developed by Tenstorrent, and the broad portfolio of CPU IP allows the company to compete for designs that require different levels of performance and power.

Server vendors can build their machines using Tenstorrent Grayskull and Wormhole accelerator cards or Blackhole and Grendel ML processors. In the meantime, entities that don't want to build hardware can buy pre-built Tenstorrent servers and deploy them.

This business model may seem controversial, because in many cases Tenstorrent competes, and will compete, with its own customers. However, at the end of the day, vendors like Nvidia offer both add-in cards and pre-built servers based on those cards, and companies like Dell or HPE don't seem too worried about this because they offer solutions for specific customers, not just building blocks.

Summary

Tenstorrent jumped into the spotlight about two years ago with the hiring of Jim Keller. Within two years, the company has recruited a group of top-notch engineers who are developing high-performance RISC-V cores for data-center-class AI/ML solutions and systems. The development team's achievements include the world's first eight-wide RISC-V general-purpose CPU core and an appropriate system hardware architecture for AI and HPC applications.

The company has a comprehensive roadmap that includes high-performance CPU chiplets based on RISC-V and advanced AI accelerator chiplets, which are expected to provide powerful solutions for machine learning. Keep in mind that AI and HPC are major megatrends poised for explosive growth, and providing both AI accelerators and high-performance CPU cores seems like a very flexible business model.

The AI and HPC markets are highly competitive, so when you want to compete with established players (AMD, Intel, Nvidia) and new ones (Cerebras, Graphcore), you have to hire some of the best engineers in the world. Like the big chip developers, Tenstorrent has its own general-purpose CPU and AI/ML accelerator hardware. At the same time, because the company uses the RISC-V ISA, it is currently unable to address some markets and workloads, at least as far as CPUs are concerned.